Research Projects

Here I attempt to summarize my research so far — like most research, this page is also a work in progress :)

My interest in how d/Deaf and hard of hearing individuals combine and move between different communication practices and technologies has drawn me to explore a variety of contexts in my research: speechreading in video calls, sign language dictionaries, captioning in multilingual media and conversations. Another thread in my research is how we might align our research with different communities' experiences and values — this work has resulted in calls for Deaf-led sign language AI research and intersectional accessibility research. I've also had the opportunity to collaborate on some wonderful teams, exploring accessibility across different contexts: generative AI, higher education, machine embroidery. You can find all of my research publications on my Google Scholar profile! Selected projects highlighted below:

Exploring Multilingual Captioning

Captions are a text representation of audio in media and real-time communication, supporting the communication needs of many disabled people. However, most research focuses on English captioning, and the needs of multilingual disabled people are underexplored. To understand challenges and opportunities across different languages and in multilingual contexts, we conducted semi-structured interviews and diary logs with 13 participants who used multilingual captions for accessibility. We recount participants' experiences navigating access across multiple languages and present ways to orient captioning research to support multilingual futures and all ways of communicating.

Reframing Isolated Sign Recognition

Sign languages are used by 70 million people worldwide, but most information and communication resources are meant for written and spoken languages. Towards goals of language access and justice, ASL Citizen is a community-curated isolated sign dataset of 2,731 signs from American Sign Language. We propose that this dataset (which contains over 83,000 videos) be used for the task of video-based dictionary retrieval for ASL. We offer baseline models using state-of-the-art techniques and define corresponding metrics for the task.

This work was part of my summer 2022 internship at MSR!

ASL Citizen: A Community-Sourced Dataset for Advancing Isolated Sign Language Recognition [NeurIPS '23]

Supporting Speechreading

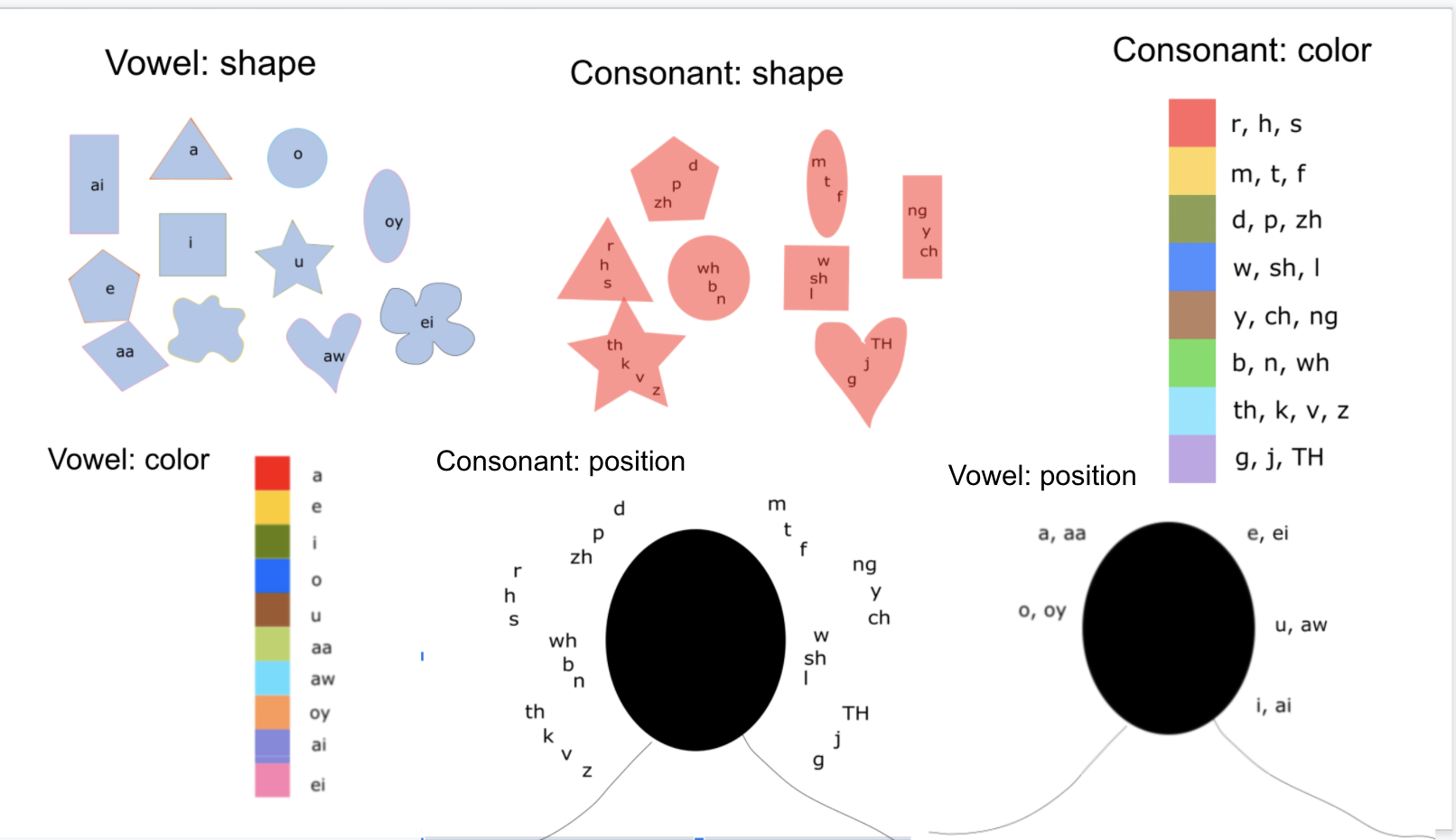

Speechreading is the art of using visual and contextual cues in the environment to support listening. Often used by d/Deaf and Hard-of-Hearing (d/DHH) individuals, it highlights nuances of rich communication. However, the lived experiences of speechreaders are underdocumented in the literature, and neither the impact of online environments nor the interaction of captioning with speechreading has been explored. To bridge these gaps, we conducted a three-part study consisting of formative interviews, design probes, and design sessions with 12 d/DHH individuals who speechread.
